Imagine asking your favourite artificial intelligence tool a quick question, or having an AI image generator produce a portrait in seconds. It might seem to happen by magic in the digital ether, but a hidden bill is racked up every time you type, swipe, or speak. The real cost of AI lies not just in groundbreaking algorithms or engineering effort; it lies in the literal energy surging through colossal data centres and the emissions silently billowing into our skies.

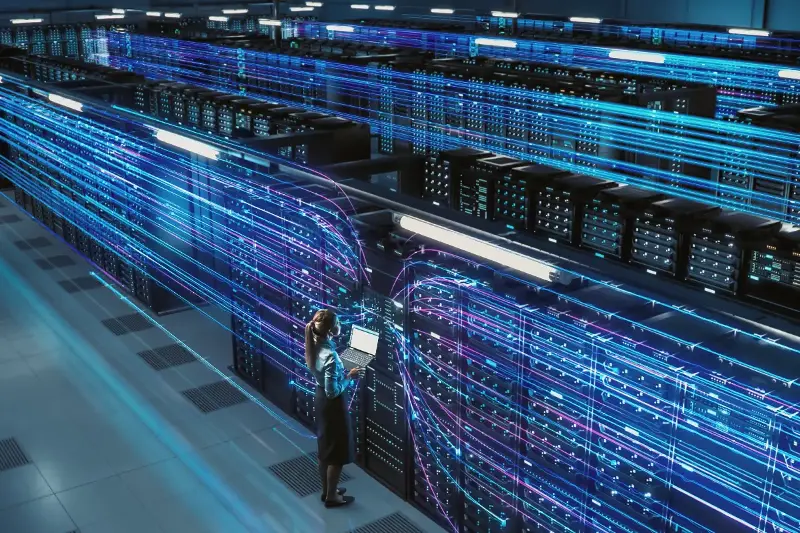

The Unseen Power Surge: What It Takes to Run the Mind of a Machine

Large language models, the backbone of today’s most impressive AI, are ravenous for computing power. OpenAI has not published how much energy training GPT-4 took, but outside estimates run to hundreds of megawatt-hours or more, enough to power a small town for weeks. And that is only the start. Every time you ask ChatGPT for advice or have a cloud-based AI tweak your photos, servers somewhere spin up, drawing energy in amounts that accumulate shockingly quickly.

Consider this: Google once estimated that a single search used about 0.3 watt-hours, roughly what a 60-watt lightbulb consumes in 18 seconds. By many estimates, querying a cutting-edge AI model takes several times more energy than that, and generating images or video takes far more still. Multiply this by billions of users worldwide, and the numbers become hard to fathom.
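To see how quickly per-query costs add up, here is a minimal back-of-envelope sketch in Python. The per-query figures and the daily query count are hypothetical placeholders, not measurements: 0.3 Wh stands in for a search, and the AI query is simply assumed to cost ten times as much.

```python
# Back-of-envelope estimate: per-query energy multiplied by query volume.
# All inputs are hypothetical placeholders chosen for illustration, not measurements.

WH_PER_MWH = 1_000_000  # 1 MWh = 1,000,000 Wh


def daily_energy_mwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total daily energy in megawatt-hours for a given per-query cost."""
    return wh_per_query * queries_per_day / WH_PER_MWH


# Hypothetical inputs: a search-like query vs. an AI query assumed to cost 10x more.
SEARCH_WH = 0.3
AI_QUERY_WH = SEARCH_WH * 10  # assumption, not a measured figure
QUERIES_PER_DAY = 1_000_000_000  # assumed: one billion queries a day

search_mwh = daily_energy_mwh(SEARCH_WH, QUERIES_PER_DAY)
ai_mwh = daily_energy_mwh(AI_QUERY_WH, QUERIES_PER_DAY)

print(f"search-style: {search_mwh:,.0f} MWh/day")
print(f"AI-style:     {ai_mwh:,.0f} MWh/day")
```

A tenfold difference per query becomes a tenfold difference in the daily total, because the query volume multiplies both sides equally.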

The Carbon Footprint You Never Considered

All that electricity has to come from somewhere. Few data centres run entirely on renewables; most draw on a mix of fossil fuels and cleaner energy sources, which means every AI interaction quietly creates a pulse of carbon emissions.
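How much carbon a given amount of electricity produces depends on the grid's carbon intensity, usually quoted in grams of CO2 per kilowatt-hour. The sketch below assumes three made-up grid intensities and a hypothetical 1 MWh of data-centre use, to show how the same workload can differ many-fold in emissions depending on where it runs.

```python
# Emissions scale with how carbon-intensive the local grid is.
# Grid intensities below are hypothetical placeholders in grams of CO2 per kWh.


def emissions_kg(energy_kwh: float, grid_g_per_kwh: float) -> float:
    """Convert electricity use to kilograms of CO2 for a given grid intensity."""
    return energy_kwh * grid_g_per_kwh / 1000.0


HYPOTHETICAL_GRIDS = {
    "mostly renewable": 50.0,
    "mixed": 400.0,
    "coal-heavy": 800.0,
}

ENERGY_KWH = 1_000.0  # assumed: 1 MWh of data-centre electricity

for name, intensity in HYPOTHETICAL_GRIDS.items():
    print(f"{name:>16}: {emissions_kg(ENERGY_KWH, intensity):,.0f} kg CO2")
```

The energy used is identical in every case; only the grid changes, which is why siting and power sourcing matter as much as efficiency.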

Some widely cited estimates illustrate the scale:

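- Training one large AI model: By one widely cited 2019 estimate, training a large language model with an extensive architecture search emitted roughly as much as five cars over their entire lifetimes, fuel included.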

- Running an AI chatbot for a year: Comparable, in some scenarios, to the yearly emissions of several homes.

- High-demand periods: Data centre energy spikes can strain grids, especially in regions where renewable integration lags behind.

It’s sobering to realise that the world’s digital intelligence is fuelling real-world climate change, one query at a time.

Why Bigger Isn’t Always Better in Tech’s Race

More capable AI models aren’t just smarter; they’re almost always hungrier for resources. Tech giants have begun competing over model size, often boasting billions or even trillions of parameters. But as these models balloon in complexity, their operational needs grow too.

Let’s break it down:

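- Model Training: The initial learning process is immensely energy-intensive, keeping large clusters of specialised chips running continuously for weeks or months. It is largely a one-off cost, though models are periodically retrained or fine-tuned.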

- Model Inference: The day-to-day running of the model whenever someone interacts with it. Each interaction uses far less energy than training, but multiplied across the millions of people using AI daily, the ongoing cost becomes gargantuan and can eventually exceed the one-off training bill.

- Cooling and Maintenance: Keeping machines running at optimal temperatures in massive, humming server farms requires yet more energy.
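The three costs above can be combined into a rough yearly estimate. This sketch assumes a model trained once and then served for a year, and folds cooling and other facility overhead in through power usage effectiveness (PUE), the common data-centre ratio of total facility energy to IT equipment energy. The function name `yearly_footprint` and every input value are hypothetical, chosen only to show how the terms interact.

```python
from dataclasses import dataclass

WH_PER_MWH = 1_000_000  # 1 MWh = 1,000,000 Wh


@dataclass
class Footprint:
    """Yearly energy split into training, inference and facility overhead, in MWh."""
    training_mwh: float
    inference_mwh: float
    overhead_mwh: float  # cooling, power conversion and other non-IT load

    @property
    def total_mwh(self) -> float:
        return self.training_mwh + self.inference_mwh + self.overhead_mwh


def yearly_footprint(training_mwh: float, wh_per_query: float,
                     queries_per_day: float, pue: float, days: int = 365) -> Footprint:
    """Hypothetical estimate for a model trained once and then served for `days` days."""
    inference_mwh = wh_per_query * queries_per_day * days / WH_PER_MWH
    it_mwh = training_mwh + inference_mwh
    # PUE = total facility energy / IT energy, so non-IT overhead is (PUE - 1) x IT energy.
    overhead_mwh = it_mwh * (pue - 1.0)
    return Footprint(training_mwh, inference_mwh, overhead_mwh)


# Hypothetical inputs, chosen only to show how the terms combine.
fp = yearly_footprint(training_mwh=1_000, wh_per_query=3.0,
                      queries_per_day=10_000_000, pue=1.2)

print(f"training:  {fp.training_mwh:,.0f} MWh")
print(f"inference: {fp.inference_mwh:,.0f} MWh")
print(f"overhead:  {fp.overhead_mwh:,.0f} MWh")
print(f"total:     {fp.total_mwh:,.0f} MWh")
```

With these invented numbers, a year of serving queries uses more than ten times the one-off training energy, and the cooling overhead alone exceeds the training cost.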

So, while AI pushes boundaries, each evolutionary leap leaves a heavier carbon footprint unless actively managed.

Sneaky Contributors: How AI’s Appetite Is Everywhere

It’s not just text and chatbots. AI powers everything from TV recommendations to city traffic management. The more “smart” our world becomes, the more energy demand we unwittingly invite into our daily routines.

- Voice assistants constantly “listening” on standby.

- Automated image tagging and facial recognition on social media.

- Real-time navigation and language translation apps.

Even seemingly minor conveniences can cascade into significant global energy draws when adopted at scale.

Tackling the Digital Dilemma: Can We Make AI Greener?

The future isn’t all doom and gloom. Awareness of AI’s environmental cost is prompting researchers and companies to act:

- Prioritising energy-efficient algorithms and chip designs.

- Siting data centres near renewable energy sources.

- Recycling server heat for district heating in innovative cities.

- Adopting carbon offsets or, more ambitiously, investing in direct air capture and other carbon removal technologies.

Consumers, too, can play a role by demanding transparency—asking companies how “green” their AI really is and supporting services with demonstrated environmental commitments.

As you marvel at the next AI breakthrough or ask your digital assistant to play your favourite song, pause and ponder: how much energy did that really use? And what might a smarter, greener future for AI look like when every interaction counts? The energy shaping tomorrow’s intelligence is as real as the insights it provides. Will we learn to balance the two before it’s too late?