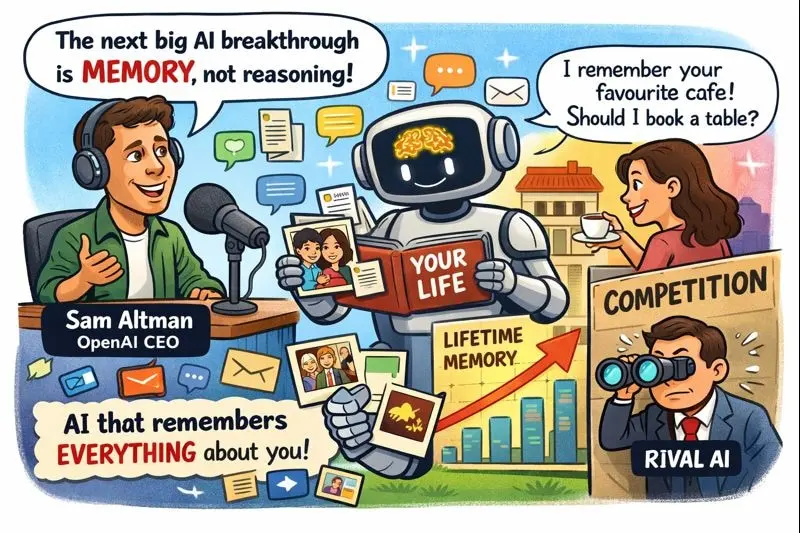

OpenAI chief executive Sam Altman has suggested that the next major leap in artificial intelligence will not come from sharper reasoning abilities, but from something far more fundamental: memory.

Speaking on a recent podcast with technology journalist Alex Kantrowitz, Altman outlined a future in which AI systems retain and learn from vast amounts of personal data across a person's lifetime, reshaping what a digital personal assistant can be.

Memory, not reasoning, as the next leap

According to Altman, today’s AI tools are already strong at reasoning tasks, but they fall short when it comes to long-term recall. Humans, he argued, remain the best personal assistants because they understand context and nuance, yet they struggle to remember details consistently.

AI, on the other hand, does not have that limitation. Altman said the real opportunity lies in building systems that can remember everything a user has shared over time, from conversations and emails to documents and preferences. Such persistent memory could allow AI to identify patterns and needs that people themselves may never consciously articulate.

He indicated that OpenAI is actively working towards this vision, suggesting that more advanced, memory-driven personal assistants could begin to emerge as early as 2026.

A shift in what ‘personal assistant’ means

Altman described persistent memory as a turning point for consumer AI. Rather than responding to isolated prompts, future systems could offer deeply personalised results, shaped by years of accumulated context.

In his view, this would move AI from being a reactive tool to a proactive assistant, capable of anticipating needs and tailoring responses with a level of precision that current systems cannot achieve.

The discussion formed part of a broader conversation about OpenAI’s long-term strategy, which also touched on infrastructure expansion, AI-powered devices and the company’s ambition to eventually reach artificial general intelligence.

‘Code red’ and rising competition

During the podcast, Altman also addressed reports of internal “code red” alerts at OpenAI, triggered by intensifying competition in the AI sector. He framed these moments not as panic, but as a deliberate strategy to stay alert and responsive.

He suggested that staying vigilant and moving quickly when a credible threat appears is healthy for the organisation. He noted that similar situations have occurred before, including earlier this year, when new competitors such as DeepSeek gained attention.

Altman added that such internal alarms are likely to become a recurring feature, potentially happening once or twice a year, as OpenAI seeks to maintain its position in a fast-moving market. While he stressed the importance of OpenAI winning in its core market, he also acknowledged that other companies can succeed alongside it.

A festive surprise for ChatGPT users

Separately, Altman has sparked online curiosity with a festive surprise for ChatGPT users. Over the weekend, he posted a cryptic message on X that encouraged people to interact with the chatbot using a single emoji.

Users soon discovered that sending a gift emoji to ChatGPT unlocks a hidden Christmas-themed interaction. The chatbot responds by prompting users to upload or take a selfie, after which the request is handed off to Sora, OpenAI’s video generation tool.

Sora then creates a short, personalised Christmas video. The festive message is tailored using details ChatGPT has learned from a user’s previous interactions, offering a glimpse of the personalised experiences that more advanced AI memory could eventually make routine.